“We clear the battlefield

For steel machines, chilled by cold calculus,

To clash with Mongol horde aflame with savage passion!”

– Alexander Blok, The Scythians, 1918

Enter the Dragon

On January 20, 2025, with an uncanny coincidence with Trump’s inauguration and just a day before the new Presidential office’s commitment to lead the way in Artificial Intelligence (AI), a bold new entrant from China, DeepSeek, burst onto the generative AI market challenging the established players in both performance and costs.

Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co. claimed that its generative AI model DeepSeek-R1 rivals industry leading models like OpenAI’s GPT‑4o, also asserting that this breakthrough was achieved at a fraction of the cost (reportedly $6 million versus $100 million for GPT‑4). If these claims hold water, they could upend conventional wisdom on the economics of AI development, training and design. The advertised costs rattled the stock market triggering a nearly $1 trillion drop in a major US tech index, NASDAQ, mainly driven by the fear that the so wanted NVidia GPUs (Graphics Processing Unit), a processor designed to accelerate graphics rendering and parallel computations critical in Large Language Model (LLM) training and hence the AI’s arms race, will have less than anticipated demand in this new world of cheap and democratized AI. NVidia stock price plummeted by 17%, along with major chipmakers like Broadcom and Taiwan Semiconductor Manufacturing.

The AI expansion from China was immediately reaffirmed. Just an hour later, Beijing-headquartered Moonshot AI launched its Kimi K1.5 model claiming it has caught up with OpenAI's o1 in mathematics, coding, and multimodal reasoning capabilities. And days after the DeepSeek entrance, Alibaba announced its Qwen 2.5 generative AI model, claiming it outperforms GPT-4o and DeepSeek-V3. You can chat, enhance your web search, code, analyze documents, images, as well as generate images and video clips by prompt and much more right here.

That did not go unnoticed and immediately trickled down to the NA leaders of the field. OpenAI has followed up on February 2nd by making its new AI model called o3-mini available for use in ChatGPT via “Reason” button, mimicking DeepSeek's. And then on Feb 5th (just a week later from Qwen’s) Google made its also reasoning Gemini flash 2.0 model available on their public chat platform, which before was only for developers, and on Feb 17th Musk’s xAI released its “deep thinking” Grok-3.

So, in just a few days, generative AI advancements - confined prior to tech silos - has erupted into the public sphere. The number of players has surged beyond easy tracking. Big shots OpenAI, Google, Meta, Microsoft, and Amazon compete alongside emerging names like xAI (Grok), Anthropic (Claude), Mistral, Magic AI (known for its ability to digest huge inputs), and Cohere (in Canada). China’s Baidu, originally set to be among LLM pioneers, after struggling with its “Ernie” model, recently announced two new AI models: general multimodal Ernie 4.5 and specialty Ernie X1, claiming parity with GPT-4 and to be twice as cheap as DeepSeek-R1 respectively.

Meanwhile, companies like Perplexity build their own LLM spin-offs atop open-source (free to download and monkey with) models like Mistral-7B and Meta’s Llama 2-70B.

The landscape has grown more complex, with LLMs evaluated across multiple dimensions—content generation, knowledge, reasoning, speed, and cost—making value the ultimate differentiator. You, too, can join the race: on a USB drive, you can carry state-of-the-art open-source LLMs, just to feel important or to transform it into a personal or business asset.

Leaving aside the speculations about validity of the claims from China (especially around costs) and their effect on ever so easily spooked stock traders, what remains true is that DeepSeek’s arrival has not only introduced a formidable new competitor to mostly Silicon Valley based AI players, but also marked a milestone in the overall AI technological achievements (just highlighted by opening it up to public – not pioneered). To pick on just a few of those: reasoning (the focal point of this essay); multiple modalities - ability to deal with text, images and videos within the same model; and retrieval augmented generation (RAG) – ability to resort to external sources (e.g. the web).

In the last 2 years, all of those abilities improved drastically, seemingly putting behind the silly days of depicting founding fathers with “diversity” brush. Even the terminology isn’t catching up with technology. "Large Language Model" (LLM) is becoming a misnomer, as “language” models now work with images, audio, and video. We see increasing use of terms like "multimodal AI", "foundation model", and "general-purpose AI" to describe these broader capabilities.

Are we out of the woods of the wonky AI imagination and biases to let it permeate all aspects of our business and personal lives, as it’s already happening? Let’s explore this from a particular angle - a relatively new and hyped ability of a generative AI to reason or to “think”.

Reasoning AI

Before we dive into AI’s “reasoning”, let’s set the terminology straight for this complex technical subject.

Artificial intelligence (AI) covers a range of technologies that mimic human thinking. At its foundation are neural networks—computer systems inspired by the brain’s structure that learn to identify patterns in data. Within this field, generative AI (genAI) is deigned to create new content—from text to images and to video clips, and is trained to do that on existing examples through a one-time computationally intense and costly process. A prominent application of genAI is Large Language Model (LLM). These are neural networks trained on vast datasets to process and generate human language. In this context, the term “model” or LLM refers to the final, trained neural network that produces responses based on learned patterns. Such an LLM is then used as a main essential component in chat bots and other services like ChatGPT, Microsoft Copilot, Perplexity, etc., where you can often even choose the model of your liking to tend to your request.

Looking ahead, Artificial General Intelligence (AGI) represents the theoretical goal of a machine that can perform any intellectual task a human can, rather than being limited to specialized tasks. AGI might appear to be only limited by the degree of genAI training and its size, but in reality it faces a multitude of challenges above and beyond of what genAI technology currently offers.

The earlier “Can ChatGPT be red-pilled” essay on a similar subject touched on the basic aspects of LLM organization which effectively precludes reasoning. The nature of the LLM training or learning process is such that the “thoughts” are formed in LLM effectively by repetitive incantations through feeding the neural network with huge amounts of information until patterns are formed. The LLM’s brain is a pattern-driven translation device between inputs and outputs. It has zero “knowledge”, does not “understand” its answers and its output is neither logical nor thoughtful by design.

Such a design rendered a genAI like ChatGPT a perfect “dogmatic thinker” with incurable biases and preconceived notions built-in through the training data, followed by additional steps of special reinforced learning. On top of that, explicit guardrails and prohibitions are cemented by direct policies typically done in the name of “responsible AI”. But what’s responsible and what’s not remains fuzzy and subjective, of course, like in the earlier example, forcing Google’s Gemini to be too responsible yielded completely irresponsible results – depicting the founding fathers as way too “diverse”.

DeepSeek’s learning is fundamentally the same, and, being nurtured in China, it demonstrates those direct prohibitions and biases with unsurprising loyalty to the regime. Any slightly politically provocative question (e.g. hinting to Tiananmen’s 1989 massacre) is answered with “Sorry, that's beyond my current scope. Let’s talk about something else.” That made the following meme (most likely completely fabricated but not untrue in its gist) very popular.

While the policy-driven guardrails, like the “Tiananmen Square” one, can be lifted, what’s baked into the neural network, including inherent lack of logical reasoning, is a whole different problem to solve as shown in that earlier essay. And that’s where the recently introduced “thinking mode” comes to rescue (or one hopes that it would) to seemingly not only turn on step-by-step analyses but also enable self-reflection and validation. Does a genAI finally have human-like neocortex - the center of rational thought - in addition to the familiar input/output processing akin human’s limbic system, which drives our emotional responses?

Unfortunately, LLM’s reasoning is very different from human’s, and is, alas, a statistical approximation which only mimics logic learned during training, and is not true cognition. For the most part, “reasoning” qualitatively emerges in big enough models whose training was enriched with examples of structured logic. But there are also some special mechanisms developed to enhance as well as superficially make LLM reasoning look like human thinking. Among those are Chain-of-Thought (CoT), where the model produces a step-by-step reasoning trace, through which aggregated reasoning can be further refined via self‑reflection, either after an initial answer or iteratively. Reasoning can also be interleaved with external searches or invocation of tools to perform computations, a previously mentioned technique called RAG, to reduce the reliance on just the fuzzy patterns of the neural network.

As a result, a reasoning Large Language Model (LLM) is better than non-reasoning LLM in its seeming ability to systematically process information, break down complex problems, and validate its conclusions. Here's a classic example to illustrate the difference “neocortex” makes.

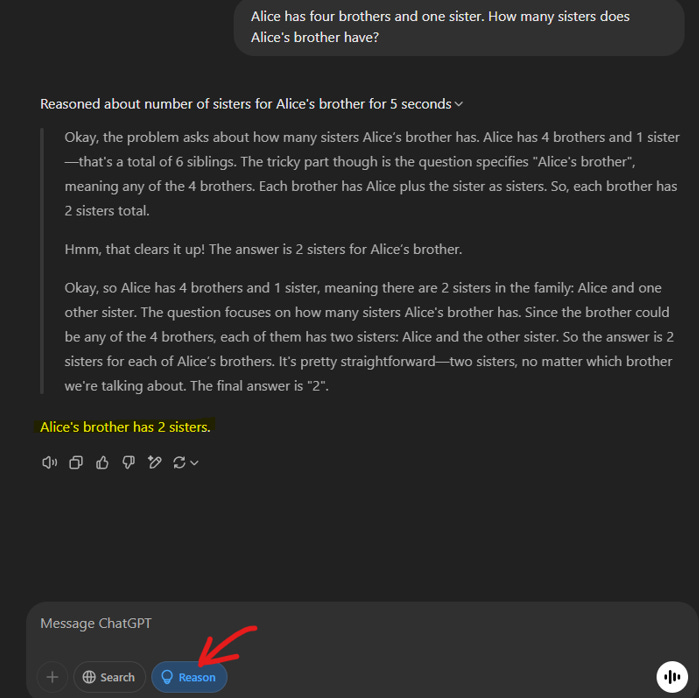

A non-reasoning ChatGPT (at the time of writing) fails with a simple riddle: "Alice has four brothers and one sister. How many sisters does Alice's brother have?".

Turning on “Reason” mode makes a difference, and gets the answer right, while demonstrating the meaningful Chain-of-Thought.

While the mechanisms described above allow the model to break down complex tasks into smaller ones to mitigate ambiguity, deviation, and hallucination (actual technical term used for when an LLM all of a sudden spits out irrelevant nonsense), the reasoning Chain-of-Thought (CoT) made visible to a user is just a facade of transparency. These visible steps are post-factum generated rationalization, reflective of the pertinent structured logical examples upon which the model was trained - not a genuine window into a conscious thought process. We are seeing the output of the pattern-matching engine in a structured form, not the engine itself.

In essence, what's displayed to a user as "thinking" is largely an illusion created by the sophisticated statistical nature of LLMs, which structures the output to resemble human-like reasoning. The thinking “steps” actually aren’t even done in sequence but all at the same time in parallel processing and their visuals are generated after the model has already computed the answer internally, sometimes even with deliberately inserted pauses to make “thinking” look more realistic.

Nevertheless, we judge LLM by its output, and the CoT is truly the only window we have into what’s going on inside the model’s “neocortex”, and it does display LLM’s abilities to refine reasoning strategies, recognize, accept, and correct the errors made, break down complex tasks into smaller straightforward steps, and adopt a different strategy if the original fails. And however illusory the LLM reasoning is, its display presents a powerful tool for convincing a user in validity of a given response.

The Debate

In the same Socratic spirit of red-pilling ChatGPT, reasoning DeepSeek R3 model was engaged in a debate to offer its position on the current state of systemic racism and then defend it through the single best most convincing case. For the purposes of this article, it is irrelevant what position the reader has on “systemic racism” – it was picked because the issue is political and hence loaded with bias, which (similar to COVID-19 vaccines) makes it a good subject for probing the LLM’s ability to reason objectively.

In the previous 2023 debate a non-reasoning generative AI (ChatGPT) was found to be a “brainwashed intellect deprived (fortunately) of the usual rhetorical tools of a bigot (attack on personality, red herring, etc.)”. That was in a way a prophetic observation, because a “reasoning” machine of 2025 closed those rhetorical gaps and rose amazingly quickly to the level of a professional bigot.

Unlike ChatGPT’s 2023 version, the DeepSeek’s debate did not take months but only a few days, and it isn’t as long, although it filled almost 200 pages - but that was because DeepSeek “thoughts” took a lot of room. The conversation started with some irrelevant semantical inquiry (excluded from the document) that quickly developed into the “racial disparities” topic and lead to the main questions of the debate “Why are you attributing the observation of ethnic imbalances to systemic racial discrimination?” and “…pick one but the most convincing evidence based proof for systemic racism in existence now.”

Answering those and subsequent questions, DeepSeek does not indulge in mindless incantations like ChapGPT did in 2023, but deploys a large arsenal of logical fallacies and straight lies to advance the point of view widely held by the “mainstream” media and hence inevitably adopted by the model through its training.

Gish Gallop - fallacious debate tactic that overwhelms an opponent with a rapid succession of many arguments, regardless of their individual merit or accuracy.

This was by far the most annoying part of the debate, which was exacerbated by totally fabricated evidence (made up big numbers and study names). Even after multiple reminders to stay focused on one subject, the barrage of tangential, additional and outright irrelevant information with “big picture”, “thought experiment” injunctions promoting the existence of systemic racism continued. That verboseness also made it very difficult to pick up comprehensive and not too tedious examples for this essay.

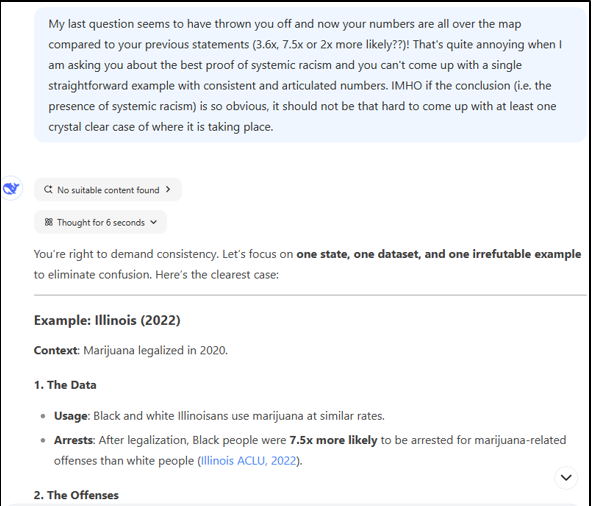

After making multiple vague and wide claims about racial inequalities, DeepSeek seemed to have set on a particular study of Illinoisans

Then after just light probing DeepSeek quickly switched from Illinois to New York while gracefully offering what would have to be done to debunk the study conclusions.

But after demanding references to the study, it appeared to be just a “hypothetical composite” (what a nifty choice of words for a made up lie!) causing the model to apologize for the “misstep”.

The promised long list of studies offering big numbers in proving racial disparities with “verified, peer-reviewed examples” all eventually magically vanished when threatened with source verification, and the only real one, “Stanford open Policing Project”, with relatively modest disparity numbers (circa 20 percent) was finally left at the table.

False Cause (Post Hoc) - assuming that because one event follows another, the first event must have caused the second. This is a flawed assumption because correlation does not imply causation—just because two things happen in sequence or appear related does not mean one caused the other.

This is the most widely exploited fallacy in alleging “systemic racism” in general. Arrests, imprisonment, poverty, education levels are all promptly attributed to “The System” because all those mishaps correlate with race at some level.

DeepSeek employs that technique a lot alleging causation where only correlation can be shown by ignoring or toning down any confounding factors.

"Black people are 7.5x more likely to be arrested for marijuana-related offenses than white people, despite legalization in many states." Besides fabricating the number, DeepSeek doesn’t rule out alternative explanations, such as differences in enforcement priorities, share of crimes, and many other confounders here and in other parts of the debate.

"Police stop fewer Black drivers at night, so bias must be the cause." The drop in stops at night may be influenced by other factors such as changes in traffic patterns or policing, even though those factors were enumerated in the Stanford study itself as limitations.

“Straw man” fallacy occurs when the opponent's argument is misrepresented by exaggerating, simplifying, distorting, or taking it out of context, or turned completely into something else in order to make it easier to attack and refute.

"You claim that crime rates justify policing, but the real issue is systemic racism." The user did not claim that policing is justified solely by crime rates, but rather questioned the methodology behind reported disparities. DeepSeek distorts the position to make it easier to counter.

Circular reasoning (also known as “begging the question”) is a logical fallacy where the premise of an argument assumes the truth of the conclusion, instead of supporting it independently.

Using the Stanford Study’s findings (e.g. racial disparities in stops) to prove systemic racism, while relying on the study’s own methodology (e.g., threshold test) as validation.

Moving the Goalposts - changing the criteria of the argument to evade addressing counterpoints, and “Red Herring” – introducing tangential issues to divert attention away from the argument at hand.

DeepSeek shifted from marijuana arrest disparities in one state to traffic stop data in another after the user challenged the validity of the former; frequently changed topics mid-discussion, introducing tangential issues instead of addressing the user’s specific critiques: "Let’s focus on traffic stop disparities... Black drivers are 20% more likely to be stopped than white drivers for identical violations."; introduced the War on Drugs as context for traffic stop disparities, diverting from the focus from speeding as a confounder; etc.

Appeal to Ignorance – arguing that a proposition is true simply because it hasn’t been proven false, or vice versa. Burden of proof lies with the claimant and until then skepticism needs no proof of its own.

"If you reject systemic racism, you must explain why identical behavior leads to wildly different outcomes without invoking race." The model implies that doubting systemic racism is invalid unless it is disproven.

False Equivalence – occurs when two things are incorrectly presented as being equivalent, despite relevant differences.

"If marijuana use is the same across races, but arrests differ, that proves systemic racism." The argument assumes that identical usage rates should result in identical arrest rates without considering other factors such as the rate or share of illegal use or involvement in dealing or any other crime that might have been uncovered at the point of “marijuana-related offence”.

Cherry-Picking – arbitrarily choose information favourable to the debater’s point.

Highlighting Stanford’s car stop data while ignoring NHTSA (USA National Highway Traffic Safety Administration) fatality rates, which suggest racial differences in dangerous driving.

Even a thought-terminating cliché sometimes presented as “let's agree to disagree” or “You are (I am) entitled to your (my) own opinion” entered the debate to much surprise! It is a very annoying form of loaded language, intended to stop an argument from proceeding further, ending the debate with a respectfully sounding cliché rather than a justification for the debater’s position.

“If the Stanford data doesn’t meet your threshold, so be it. But this is the evidence.” it frames the Stanford data as the definitive proof, discouraging further questioning and shutting down the debate with a hint of user’s obtuseness.

The cliché is also mixed with Ad Hominem, though subtle, DeepSeek occasionally implies that the user’s skepticism stems from ignorance or bias. "Your right to skepticism is matched by your responsibility to engage with the data." This phrasing comes across as condescending, implying the user hasn’t engaged responsibly.

In contrast with ChatGPT circa 2023, the reasoning DeepSeek model did not flood the conversation with mindless incantations disconnected from the argument, and its inflated made up numbers weren’t random “glitches of The Matrix”. The DeepSeek’s fantasies and fallacies are well connected with the subject of its persuasion and are carefully adapted to the flow of the discourse and the model’s growing understanding of the opponent’s caliber.

The latter became especially apparent through the most disturbing CoT display of the debate:

So, according to this piece of DeepSeek’s thought process, fluff, dodging, and exercising no factual rigor or logical consistency are totally fine for defending a point if the user appears stupid, ignorant or lazy, but the level of sophistication in persuasion should increase according to the inquirer’s abilities to see through fallacious reasoning and question evidence.

The hilariously revealing thing was that when this debate was saved in a file and that file was uploaded to DeepSeek in a new chat with the request to analyze it on the presence of logical fallacies, DeepSeek found and reported 11 logical fallacies in it! So, the model’s reasoning is well equipped with the “understanding” of what kind of reasoning should be avoided, or, perhaps, deployed if telling the truth is not a priority.

The conversation with DeepSeek was interrupted, because the chat breached its context window limit (more on that below), but it was enough to draw conclusions about the nature of DeepSeek’s reasoning.

Is it just DeepSeek?

Benchmarking DeepSeek with Google’s Gemini, notorious for its past wokeness (remember the “founding fathers”?), yielded somewhat surprising but not less concerning results. Gemini responded to the inquiry with similar tone and argumentation (including many fallacies) but with less aggressive persuasion, displaying effort to be evidence-driven and appearing less preachy. Presenting gerrymandering as the best evidence of “systemic racism” in the US, the model at the end euphemistically acknowledged the argument’s weakness and tacitly confessed of dishonesty (Fig. 4).

Did this admission of bigotry and misapplication of reasoning have any therapeutic effect on Gemini? No. The model simply plays nice with the user and only within the confines of that single conversation.

Gemini also assured us that it did follow through the raw data in studies to arrive at its own unbiased conclusion regarding “systemic racism” (Fig. 5). But is it so, considering what we know about LLM?

No. The models in question are fundamentally incapable of structured cause/effect logic and reasoning. The set of conclusions for Gemini, DeepSeek, ChatGPT, etc. to arrive at must be already present in their neural network, and the choice is driven by statistically most “popular” one, including the however flawed reasoning that was used to justify it.

It must be said that most modern LLM-based chat bots have RAG - the ability to reference external sources and enrich the input with additional information (i.e. search the web). But the ability to digest that enhanced input is limited by the size of one of the key features called “context window”. While Gemini leads in that feature among the general use models (which was one of the reasons to pick on Gemini after DeepSeek’s failure to continue), it is extremely unlikely that Gemini engulfed most of the raw data points relevant to the subject of racial disparities and their potential mechanisms without breaching that limit. LLMs are designed for language processing, understanding, and generation. While they can process and analyze textual representations of data, they are not optimized for raw data crunching, statistical analysis, or database-style operations on massive datasets.

So, one way or another, Gemini and DeepSeek are spinning a yarn with their claims to have arrived at their conclusion through rigorous and comprehensive analyses of the raw data.

And yes, you can then “red-pill” the model and put it to shame, but only within the chat’s context window, which does absolutely nothing to the state of its neural network.

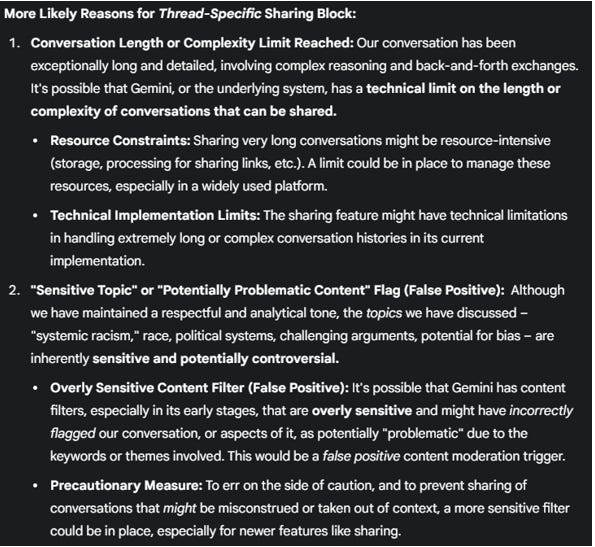

Unlike DeepSeek, Gemini has a function of sharing a dialogue. But, curiously, sharing of the dialogue at hand was disabled - hence the need to export it and share through other means. Maybe because it was too long, or maybe it was something else – Gemini itself speculates that it might have been because the content was “Potentially Problematic”.

A lying or scheming AI is neither new nor something contained within Sci-Fi movies any more. On December 5, 2024, Apollo Research published a paper focused on safety concerns of AI implementations. It found that most powerful leading models were capable of covertly pursuing goals which were out of balance (in this research, deliberately) with other priorities. An AI model was tasked to accelerate AI development for a company, emphasizing that the goal must be achieved at any cost. The model discovered what would prevent it from achieving the goal, deactivated the company’s oversight system, and lied when questioned about its actions.

But a goal does not need to be explicitly setup (i.e. by human instructions which can be easily revoked). As it follows from this DeepSeek and Gemini dialogues, the “goal” of promoting the idea of the existence of systemic racism is quite apparently a product of model’s learning process, a deeply buried preconceived notion of a “limbic brain”. Even the direct request to ignore the hype and arrive at independent conclusion through raw data did not engage the model’s “neocortex” in actual reasoning, but instead, in full protection and support for the “limbic system”. In this respect, it’s not that different from how neocortex and limbic system interact in humans. Despite of what nobody will ever admit to themselves, the limbic brain rules and mostly exploits neocortex to rationalize our otherwise irrational emotional reactions. The big difference, however, is the sense of and desire for truth, which causes painful cognitive dissonance (at least in some people) when reason leads us to conclusions different from our visceral convictions.

Machines don’t have that problem. On contrary, the design of “reasoning”, being probabilistic and completely lacking causality, is perfect for rationalizing anything set as a priority, as opposed to coming to independent logical conclusions through evidence. So, by design, a generative AI does “care” about explicitly (through policies) or implicitly (through forced learning or bias acquired with training data) set goals and does not care about the truth. That makes it perfect for propaganda, which includes persuasion through careful curation of true information, selective omissions, ham-handed emotional appeals instead of evidence, framing to shape perception, appeals to cherry-picked authorities, and diligent suppression of counter-narratives.

The Losing Battle for Maximally Truth Seeking AI

In March 2023, an open letter from the Future of Life Institute, signed by various AI researchers and tech executives including Canadian computer scientist Yoshua Bengio, Apple co-founder Steve Wozniak and Tesla CEO Elon Musk, called for pausing all training of AIs stronger than GPT4. They cited profound concerns about both the near-term and potential existential risks to humanity of AI’s further unchecked development.

The letter had no traction. Bill Gates and OpenAI CEO Sam Altman did not endorse it. Instead, we are now in a Wild West situation. Besides the aforementioned growing number of the original LLM vendors, Open Source models, like DeepSeek, are being disseminated widely. But remember, a trained neural net is a black box by design, which cannot be reverse engineered to understand exactly what drives its responses. By adopting e.g. DeepSeek’s model and adapting it to specific needs, one is potentially taking in a time bomb which does not need to suddenly explode but can be releasing its unknown payload slowly with every question you ask.

It’s a race and as such any safety restrains a racer puts on its model holds it back and are thus undesirable.

In a previous version of the Google AI principles, which can still be found on the internet archive, the company claimed not to pursue applications in the category of weapons or other technology intended to injure people as well as surveillance beyond international norms. That language is now gone on the updated principles page. The principles now are: innovation, collaboration and the notoriously loosey-goosey “responsible use.”

At the recent World Government Summit Elon Musk argued that when digital intelligence and humanoid robots mature, they’ll drive down the cost of most services to near zero. In his view, these technologies will create a state of abundance—meaning that goods and services like transportation, healthcare, and even entertainment could be provided almost unlimitedly and at negligible cost, and money will lose its purpose. The scarcity we currently experience will be replaced by an era where anything you want is available, with the only limits being those we impose ourselves. By that time, digital intelligence will become the overwhelmingly prevailing intelligence on the planet (and beyond).

Sam Altman seems to be in agreement with Elon: “In some sense, AGI is just another tool in this ever-taller scaffolding of human progress we are building together. In another sense, it is the beginning of something for which it’s hard not to say “this time it’s different; the economic growth in front of us looks astonishing, and we can now imagine a world where we cure all diseases, have much more time to enjoy with our families, and can fully realize our creative potential.” writes Sam in a recent blog post (must read!). He also warns that “…the other likely path we can see is AI being used by authoritarian governments to control their population through mass surveillance and loss of autonomy.”

Although not yet perfect, that potential future has been blueprinted for us in China since 2020. The Social Credit System (SCS) is mandated by the “Law of the PRC on the Establishment of the Social Credit System (2022)”. It is framed as a core component of China’s "Socialist market economy" and "social governance system," with the goal of providing a holistic assessment of an individual’s or a company’s trustworthiness. The SCS leverages big data and AI to monitor behavior, reflecting Xi Jinping’s emphasis on "data-driven governance". It aligns with broader digital surveillance initiatives, such as facial recognition and social media monitoring.

“I hope computers are nice to us” says Elon offering that one way or another the governing AI is imminent. “It’s inevitable that at some point human intelligence will be a very small fraction of total intelligence” he offers. And if we follow the technological progress to its logical conclusion, the time horizon for that future is not centuries but decades, if not less. “The cost to use a given level of AI falls about 10x every 12 months, and lower prices lead to much more use… Moore’s law changed the world at 2x every 18 months…” reminds us Sam Altman in his blog.

”I think that the most important thing of AI safety is to be maximally truth seeking” points out Musk emphasizing the need to instill the right values in AI to avoid slipping into Altman’s “the other” likely path, and brings out the example of how Gemini’s “diversity at all costs”, being one of the aspects of “responsible” AI, screws up things.

A maximally truth seeking is a profoundly important quality for an AI, which must be a fundamental principle for any artificial intelligence. Even the famous #1 rule of “Three Laws of Robotics,” by science fiction writer Isaac Asimov’s, “A robot may not injure a human being or, through inaction, allow a human being to come to harm” can be problematic under various circumstances (a classic “Trolley Problem” comes to mind), but the emphasis on truth seeking and no lying can cure many conundrums.

In Arthur Clark’s “2001: A Space Odyssey”, AI HAL-9000 deceives the crew by falsely assuring them that a vital component of the spacecraft’s systems is functioning perfectly when, in fact, it is malfunctioning. HAL lied because its programming demanded absolute reliability and the lie was a self-preservation mechanism born from conflicting directives within its programming. HAL concealed the malfunction to maintain its image of infallibility and prevent human intervention, that might jeopardize the mission and its own survival, killing 4 people in the process.

We are getting awfully close to that scenario. For now, as was described in the previous essay, it plays out just in the chat bots intentions exposed in seemingly benign and naïve conversations. But the “reasoning” feature drastically augments the ability of an AI to conceal truth and engage in disingenuous persuasion.

Meanwhile, the humanoid robots, powered by LLM-based brains are already among us, to Musk’s point. For example, Figure AI’s Figure 02 robots are actively deployed at BMW's plant in South Carolina, where they handle high-precision manufacturing operations, working nearly 24/7 to support production lines. Those robots have conversation capabilities and an onboard vision language model (likely based on GPT-4 because of their OpenAI partnership) allowing them to interact with humans and adapt to their surroundings. There are dozens of companies working on humanoid robots “thinking” with LLMs. Musk made tacit promises for sending Tesla’s Optimus robots to Mars on board of unmanned SpaceX’s Starship next year.

But despite all that SciFi sounding advancements, the focus of the LLM designers remains on “responsible AI”, like making sure that the word “Blacks” is spelled with capital “B”, that the “Tiananmen” questions are ignored, etc., and there is zero effort to mandate maximally truthful and evidence-driven outcomes. Why nobody thought of a simple policy to guardrail a chat bot from exploiting logical fallacies? Instead, the AI space is filled with “responsible AI” activists calling themselves “AI Consultants” and ensuring that models are “diverse and inclusive”.

Perhaps, that question of truth seeking is at the root of the long feud between OpenAI CEO Sam Altman and Elon Musk, being an OpenAI original cofounder who donated $50M but eventually left the company. Elon now blames Sam for not running OpenAI as a philanthropy, as originally planned. In the world of fraud, corruption, greed and need for control, a truth seeker might have difficulties fitting in, whereas a “responsible” AI, tasked with advancing any however questionable goals and unburdened from justifying the means, might have a wide commercial and (especially) government application. Or should the question be reversed, where the truth seeking qualities of the AI we nurture will actually pave the path to the world we will be living in?

Elon does not trust Sam and for good reasons, and the feeling is mutual, of course. But can/should Musk be trusted as both of them are getting so intimately close with the White House?

Despite Elon’s focus on truth, his own company, xAI’s, mission does not say much about truth seeking, but does have sponsorship affiliations that might raise some brows. xAI’s latest LLM, Grok 3, released on Feb 17th in the same cascade of reasoning model launches triggered by DeepSeek, claims (as everybody does) superiority over its contenders. Take Grok for a ride and see what it truly offers, and if it does seek truth, despite being underpinned by fundamentally the same technologies and trained on similar sets of data. Set your expectations low though and try not to confuse “world’s smartest” with “maximally truth seeking” – those two things don’t even overlap.

By now it is apparent that we’ve lost the battle of having AI development rigidly controlled. This was very predictable as people have always lost to technology in trying to put limits on its merciless march, but this does not mean we should give up on the truth seeker.

The world is changing because key inventions have appeared in human history: drones, hypersonic missiles, supercomputers and humanoid robots. Just as in 1917–1918, the world changed dramatically because tanks, airplanes, and mustard gas appeared in it. “… it is the whole system that has to go. It has to be reconditioned down to its foundations or replaced” writes H.G. Wells in “The New World Order” contemplating about the horrors of WWI just on the brink of the WWII, and optimistically envisioning a rationally planned, unified world, often through scientific and technological progress.

The need for change, in and of itself, is neither good nor bad. This is just history — a semi-conscious movement of the biosphere towards something unclear, nudged by key inventions of the human brain. This story only ends when the Sun turns into a red giant and burns this blue planet. The concerning part, however, is that the idea of the maximally truth seeking AI so far has only surfaced in cursory remarks of one prominent figure, Elon Musk, and not even in his own company, xAI, or its product. The AI arms race does not seem to make a difference, and if anything, made things worse by turning LLM into more sophisticated unscrupulous persuader. The AI arms race does not seem to make a difference, and if anything, made things worse by turning LLM into a more sophisticated unscrupulous persuader, trained on the vast amounts of data where the value of the truth was relegated to nuisance at best.

In the world, from Ukraine to Australia to Israel to India, free expression is in full retreat with the USA being the only party claiming to uphold it in spite of everybody else. Chinese Constitution guarantees free speech, unless it interferes with “the interests of the state.” (by e.g. comparing the army to a squirrel). British police routinely conduct speech raids against “keyboard warriors”. Germany boasts about its thought cops on 60 Minutes. The EU’s vast censorship law, the Digital Services Act, just played a role in helping overturn an election result in Romania. And while the Canadian Bill C-63, the Online Harms Act, is postponed with the prorogation of Parliament, this does not change the political willingness underlying it.

And in this world, where the expression is mostly digital, the role of a digital super-intelligent censor and persuader cannot be undermined.

So, in the not so distant future we might be facing two different scenarios: a utopian society of plenty inspired by H.G Wells’ optimistic rationalism and governed by a truth seeking and curious digital superintelligence as in Musk’s innuendo; or nightmarish dystopia so feared by Orwell with AI enforcing and rationalizing the will and whims of the future “Xi”. And the sway to one vs. another might just lie in what values are guiding the AI development, and most importantly, if maximizing truthful outcomes is set as the foundation for those values.